In this blog, we’ll walk through a strange case of Intune policy tattooing (yes, a new tattooing issue), where every deleted setting refused to be removed from ALL devices. We’ll examine how we reproduced the behavior, what we expected to see in the SyncML traces and event logs, what actually happened on the device, and how something hidden in the tenant caused the tattooing issue.

Policy Tattooing

You configure a policy, assign it to a group, and everything applies exactly as intended. Weeks later, you decide to unassign the group or even delete the policy entirely (Why? Because you can). Logically, those applied settings should disappear from the device once it syncs with Intune. Instead, they are left behind on all devices, and each device continues to enforce a configuration that no longer exists. That is what’s known as policy tattooing.

A configuration “tattoos” itself onto the device when Intune never sends the Delete command that would clean it up. Normally, when you remove or unassign a policy, Intune sends a Delete operation via SyncML.
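For illustration, a Delete command inside a SyncML message has roughly the shape shown below. This is a minimal sketch, not a capture from the affected tenant: the LocURI is a made-up placeholder, and `delete_targets` is a hypothetical helper that lists which nodes a message asks the device to remove.

```python
import xml.etree.ElementTree as ET

# Illustrative SyncML fragment. The LocURI is an assumed placeholder path,
# not one captured from the tenant described in this post.
SAMPLE = """<SyncML xmlns="SYNCML:SYNCML1.2">
  <SyncBody>
    <Delete>
      <CmdID>5</CmdID>
      <Item>
        <Target>
          <LocURI>./Device/Vendor/MSFT/Policy/Config/ExampleArea/ExampleSetting</LocURI>
        </Target>
      </Item>
    </Delete>
    <Final/>
  </SyncBody>
</SyncML>"""

NS = {"s": "SYNCML:SYNCML1.2"}


def delete_targets(syncml_text):
    """Return the LocURI of every Delete command in one SyncML message."""
    root = ET.fromstring(syncml_text)
    path = "./s:SyncBody/s:Delete/s:Item/s:Target/s:LocURI"
    return [uri.text for uri in root.findall(path, NS)]


print(delete_targets(SAMPLE))
```

An empty list from a message that follows a setting removal is the pattern this post is about.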

The device receives the Delete instruction and removes the associated CSP nodes or registry keys. The process is automatic and invisible. But for one tenant/customer, that entire cleanup step had stopped working, and not just for one policy, but for ALL of them. It sounded impossible, but it was real. Sometimes, an old tattooing problem you thought was gone comes back in full force.

How the Policy Tattooing started

The initial report came from a tenant where every configuration change left something behind. When settings were deleted or policies unassigned, the old configurations didn’t disappear. Even after a policy was removed entirely, the corresponding registry values remained tattooed on the client.

That’s where the investigation began. Initially, the assumption was straightforward: perhaps one policy was misbehaving. Possibly a deployment bug, a version mismatch, or a CSP that didn’t interpret deletes properly. But after multiple tests, it became clear that this wasn’t limited to a single policy or device. Every policy in that tenant was affected. Every single policy had the tattooing issue!

So we decided to reproduce the issue under controlled conditions and capture exactly what was happening on the wire.

Building the Policy Tattooing test

To reproduce the problem, we chose an Edge configuration policy. Edge settings are easy to trace, they map directly to registry keys, and they provide visible results during testing. We started fresh with a clean virtual machine enrolled in Intune and prepared the test plan:

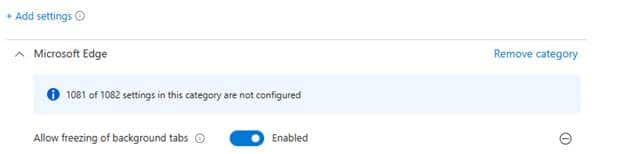

- Create a policy with a single Edge setting and sync the device.

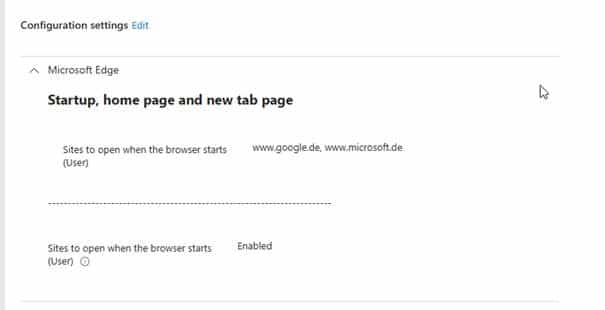

Once the device had synced, the new policy showed up, as expected.

- Add a second setting to the same policy, and remove the first setting that had already been deployed to the device.

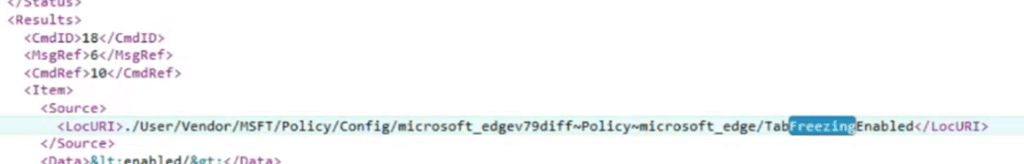

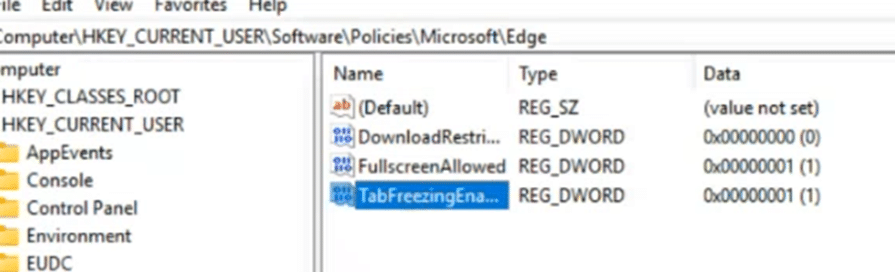

- Watch the device’s behavior in real time using the SyncML viewer and the DeviceManagement-Enterprise-Diagnostics-Provider event log. We noticed that the tab-freezing setting did show up on the device.

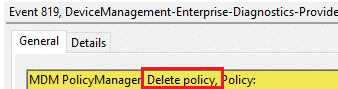

If everything worked normally, we would see a ‘Delete’ command in the SyncML trace when the first setting was removed, and an Event ID 819 in the event log indicating that the device had deleted the configuration locally.

That’s what should have happened… but we ended up with some nice policy tattooing.

The Next Step to fix the Policy Tattooing issue

The first Edge setting, “RestoreOnStartup,” was removed, and a new one, “TabFreezing,” was added. The device synced successfully, picked up the new setting, and applied it right away.

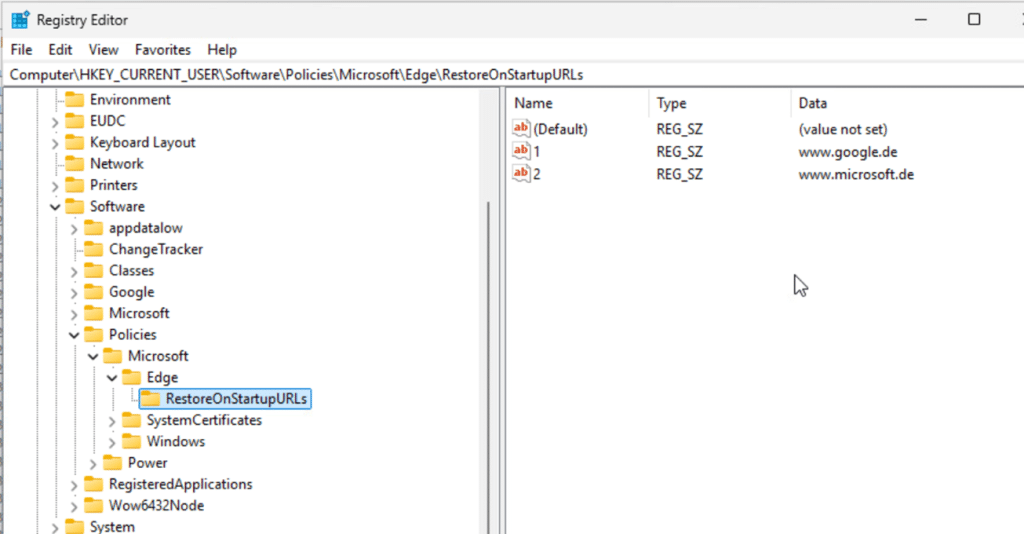

But the removed setting stayed, a.k.a. it was still tattooed.

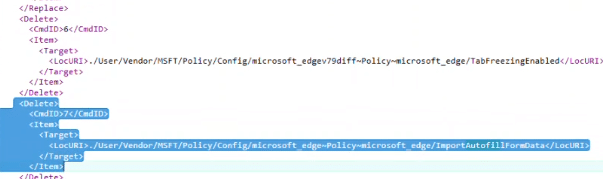

Opening the SyncML viewer confirmed the suspicion. The trace contained Add, Replace, and Get commands. The expected Delete operation was nowhere to be found.
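A check like this can also be scripted against a saved trace. The sketch below is an assumption-laden example, not the tooling we used: `count_commands` is a hypothetical helper, and the sample message is hand-written to resemble the failing tenant (Replace and Get, but no Delete).

```python
import xml.etree.ElementTree as ET
from collections import Counter

# SyncML commands we want to tally in a trace.
COMMANDS = {"Add", "Replace", "Get", "Delete", "Exec"}


def local_name(tag):
    """Strip an XML namespace such as '{SYNCML:SYNCML1.2}' from a tag."""
    return tag.rsplit("}", 1)[-1]


def count_commands(syncml_text):
    """Count the management commands found anywhere in one SyncML message."""
    root = ET.fromstring(syncml_text)
    return Counter(
        local_name(el.tag) for el in root.iter() if local_name(el.tag) in COMMANDS
    )


# Hypothetical trace resembling the failing tenant: no Delete command.
trace = """<SyncML xmlns="SYNCML:SYNCML1.2"><SyncBody>
  <Replace><CmdID>2</CmdID><Item><Target><LocURI>./Example/New</LocURI></Target></Item></Replace>
  <Get><CmdID>3</CmdID><Item><Target><LocURI>./Example/New</LocURI></Target></Item></Get>
  <Final/>
</SyncBody></SyncML>"""

counts = count_commands(trace)
print(dict(counts))
print("Delete present:", counts["Delete"] > 0)
```

Because `Counter` returns 0 for missing keys, a trace without any Delete command reports `Delete present: False` rather than raising an error.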

The event logs matched what we saw in SyncML:

- Event 814 entries appeared (Add/Replace),

- Event 819 (Remove) NEVER showed up.
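The same comparison can be sketched for an exported event log. This example assumes the events were exported as XML and wrapped in a single root element (here called `Events`, an assumption about the export, not a requirement of Windows); `event_id_counts` is a hypothetical helper, and the sample data is hand-written to mirror what we saw: 814 entries and no 819.

```python
import xml.etree.ElementTree as ET
from collections import Counter

# Namespace used by Windows event XML.
EVT = "{http://schemas.microsoft.com/win/2004/08/events/event}"


def event_id_counts(events_xml):
    """Count EventID values in an XML export wrapped in one root element."""
    root = ET.fromstring(events_xml)
    return Counter(int(el.text) for el in root.iter(EVT + "EventID"))


# Hypothetical export with two 814 entries and no 819 entries.
sample = """<Events>
  <Event xmlns="http://schemas.microsoft.com/win/2004/08/events/event">
    <System><EventID>814</EventID></System>
  </Event>
  <Event xmlns="http://schemas.microsoft.com/win/2004/08/events/event">
    <System><EventID>814</EventID></System>
  </Event>
</Events>"""

counts = event_id_counts(sample)
print("814 (Add/Replace):", counts[814])
print("819 (Remove):", counts[819])
```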

Even after several forced syncs, the registry key for the deleted setting remained untouched. To rule out any weird behavior, we repeated the test with a different set of Edge settings and a brand new policy: same result. New Intune settings came down, but settings we removed from the policy were never removed from the device. Next, we tested the same thing, but this time by setting the policy to the opposite of what it was (so Intune would send out a Replace command).

As expected, the Replace command was successfully sent to the device, and the setting was flipped to disabled. At this point, we had a full reproduction. Intune wasn’t sending Delete operations to any device, meaning that every configuration removed from a policy simply stayed tattooed on the devices… until they were wiped. Fun issue, right?

Gathering the proof

We collected every trace we could get our hands on (we even video recorded it):

- SyncML logs clearly showed Add, Replace, and Get commands, but no Delete.

- MDMDiagReport confirmed that the CSP nodes for the removed settings still existed.

- Event Viewer contained 814 entries for new settings, but 819 was missing across the board.

- Intune audit logs proved that Intune itself thought the settings were removed correctly: the Patch entries showed the settings deleted from the policy.

- Policy reports showed no conflicts. From Intune’s perspective, everything looked clean and healthy.

The evidence pointed in one direction: the client wasn’t at fault. The Delete command simply wasn’t being sent from the service side, and without it, the settings stayed tattooed on the device.

Cross-checking the Policy Tattooing in a clean tenant

To ensure this wasn’t a global issue, we performed the same experiment in a different tenant, using the same steps and settings. This time, everything behaved exactly as expected.

When a setting was removed, the SyncML trace contained a Delete command. The event log recorded Event ID 819. The registry keys vanished from the device immediately.

That ruled out any product-wide defect. The issue was isolated to one tenant. Something within that tenant was blocking deletion altogether.

Escalation to Microsoft

With both sets of traces, one from the failing tenant and one from the working tenant, we reached out to Microsoft. Within a very, very short time, they spotted the problem.

Deletion in that tenant was “suspended”. The system wasn’t failing; it was waiting.

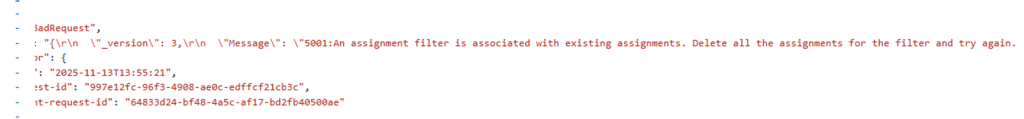

A single older policy was still assigned to a group with an invalid assignment filter attached to that assignment, even though the filter was NOT visible in Intune.

The filter had been deleted long ago, but the backend was still trying to evaluate it. Because that reference was broken, the entire deletion pipeline for ALL the policies (yes, ALL other policies) was frozen in place. This small detail had massive side effects. Any operation requiring the delete command was effectively paused until the invalid filter was resolved.

The reason this could even happen goes back years. In an earlier release of Intune, it was possible to remove an assignment filter even while it was still attached to a policy assignment. Once Microsoft noticed, they fixed it, and from then on it was no longer possible to delete a filter that was still assigned.

But tenants that had done this in the past carried those orphaned filter references quietly in the background. This tenant was one of them.

The fix

Once the engineering team identified the specific policy, we replaced the invalid filter simply by opening the policy Microsoft had pointed out, selecting a new filter, and saving it.

Once the assignment filter was changed, for the first time in months, the SyncML trace included Delete commands. The event logs finally showed Event 819 entries for each removed setting.

The registry keys that had remained tattooed for months on the devices were gone within seconds. Intune’s deletion process had resumed normal operation.
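To confirm a cleanup like this on a device, one could compare the settings removed from the policy with the values still present in the registry. The sketch below is only an illustration: `still_tattooed` and `read_policy_value_names` are hypothetical helpers, and the default key path assumes the Edge settings land under `HKLM\SOFTWARE\Policies\Microsoft\Edge`, which should be verified for the settings being tested.

```python
def still_tattooed(removed_settings, values_on_device):
    """Return removed setting names that are still present on the device."""
    present = {name.lower() for name in values_on_device}
    return sorted(name for name in removed_settings if name.lower() in present)


def read_policy_value_names(key_path=r"SOFTWARE\Policies\Microsoft\Edge"):
    """List value names under an HKLM policy key (Windows only).

    The default key path is an assumption about where Edge policies land.
    """
    import winreg  # imported lazily so still_tattooed works on any platform

    names = []
    try:
        with winreg.OpenKey(winreg.HKEY_LOCAL_MACHINE, key_path) as key:
            value_count = winreg.QueryInfoKey(key)[1]  # number of values
            for index in range(value_count):
                names.append(winreg.EnumValue(key, index)[0])  # value name
    except FileNotFoundError:
        pass  # key absent: nothing is configured there
    return names


# Hypothetical example: RestoreOnStartup was removed from the policy,
# but both values are still on the device.
print(still_tattooed(["RestoreOnStartup"], ["RestoreOnStartup", "TabFreezing"]))
```

On an enrolled Windows device, `still_tattooed(removed, read_policy_value_names())` returning an empty list would indicate that the removed settings are really gone.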

Closing thoughts

What began as a simple complaint about stubborn settings turned into a deep dive into Intune’s deletion pipeline. The problem wasn’t with the devices or the policies themselves, but with an old, forgotten assignment filter reference that had been left behind in the tenant.

Once that single broken filter link was removed, everything returned to normal. Intune started sending Delete commands again, Event 819 appeared in the logs, and devices cleaned themselves up automatically.

It was a reminder that even in a modern cloud service, legacy artifacts can cause strange behavior years later. But once identified, the fix was simple: remove the ghost of the past and let Intune do its job.